Ransomware Attack Leads to Oakland Man’s Identity Theft

Ransomware attack leads to identity theft of an Oakland man – a chilling headline that underscores the growing threat of cybercrime. This story isn’t just about numbers and statistics; it’s about a real person whose life was turned upside down by a malicious attack. We’ll delve into the specifics of this case, exploring the methods used, the devastating consequences, and, most importantly, what you can do to protect yourself from a similar fate.

This isn’t just a cautionary tale; it’s a roadmap to digital security in an increasingly interconnected world.

The Oakland man, let’s call him John (for privacy reasons), became a victim after clicking on a seemingly innocuous email attachment. This triggered a ransomware attack that encrypted his files and, more disturbingly, stole a wealth of personal information. From banking details to Social Security numbers, John’s digital life was laid bare. The ensuing identity theft resulted in fraudulent credit card charges, attempts to open new accounts in his name, and a significant dent in his credit score.

His ordeal highlights the interconnected nature of data breaches and the far-reaching impact of ransomware attacks.

The Ransomware Attack

The recent ransomware attack targeting an Oakland man highlights the growing threat of cybercrime and the devastating consequences it can have on individuals. It underscores the importance of robust cybersecurity practices and the need for greater awareness among internet users. By John’s account, the attack began when he opened a malicious email attachment, a classic phishing technique.

Phishing is one of the most common ways ransomware is delivered, because many people are not trained to spot sophisticated phishing attempts. Other entry points cannot be ruled out, however. An attacker may exploit a known vulnerability in the victim’s software, such as an outdated operating system or an unpatched application, to gain unauthorized access. An attacker could also compromise a website or service the victim frequently uses and inject malicious code through a drive-by download or a similar method.

Regardless of the initial vector, the goal was the same: to infiltrate the system and deploy ransomware.

Vulnerabilities Exploited

The attackers likely exploited several vulnerabilities to successfully breach the Oakland man’s systems. These vulnerabilities could range from outdated software and operating systems lacking critical security patches to weak passwords and a lack of multi-factor authentication. Human error, such as clicking on malicious links in phishing emails or downloading infected files from untrusted sources, also played a significant role.

The absence of a robust firewall and antivirus software would have further increased the vulnerability of the system. For example, an outdated version of Windows without automatic updates enabled could be open to well-known flaws such as the SMBv1 vulnerability (MS17-010) targeted by the EternalBlue exploit, which the WannaCry ransomware famously used. Similarly, a weak password such as “password123” can be cracked almost instantly with readily available tools.
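To see why a password like “password123” offers so little protection, here is a minimal Python sketch of a dictionary attack. It assumes, purely for illustration, that the password was stored as an unsalted SHA-256 hash; the six-word list stands in for the huge wordlists real cracking tools use, and the `crack` function name is hypothetical.

```python
import hashlib

# Illustrative assumption: an attacker has obtained an unsalted SHA-256 hash
# of the victim's password (for example, from a poorly protected database).
leaked_hash = hashlib.sha256("password123".encode("utf-8")).hexdigest()

# A tiny stand-in for real wordlists, which contain millions of common passwords.
wordlist = ["123456", "qwerty", "letmein", "password", "password123", "iloveyou"]


def crack(target_hex, candidates):
    """Hash each candidate and return the one whose digest matches, or None."""
    for guess in candidates:
        if hashlib.sha256(guess.encode("utf-8")).hexdigest() == target_hex:
            return guess
    return None


print(crack(leaked_hash, wordlist))
```

The search succeeds as soon as the guess appears in the list. Slow, salted hashing schemes such as bcrypt, scrypt or Argon2 make each guess far more expensive, but a password that sits near the top of every wordlist still falls quickly.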

Impact on Computer Systems and Data

The ransomware attack likely resulted in the encryption of the Oakland man’s critical files, rendering them inaccessible without the decryption key held by the attackers. This could include personal documents, financial records, photos, and other irreplaceable data. The attack might have also compromised the functionality of his computer systems, potentially causing significant disruption to his work and personal life.

The encryption process could have severely degraded the performance of the system, making it nearly unusable. Beyond the direct impact on his data and computer, the attack may have caused significant emotional distress and financial losses from recovery costs and identity theft. Consider a scenario in which the victim’s tax information was not only encrypted but also stolen: the attackers could then file fraudulent tax returns in his name.

Timeline of the Attack

The exact timeline of the attack is unknown without access to forensic data. However, a likely scenario might unfold as follows:

1. Initial Compromise

The attacker gains initial access through a phishing email, an exploited software vulnerability, or some other means. This stage might stretch over several days, weeks, or even months.

2. System Reconnaissance

The attacker explores the victim’s system to identify valuable data and assess the security measures in place.

3. Data Exfiltration (Possible)

In some cases, attackers copy data off the system before encrypting it, creating a secondary threat of data exposure even if the ransom is paid. When an attack ends in identity theft, as John’s did, data stolen at this stage is the likely source.

4. Ransomware Deployment

The attacker deploys the ransomware, which begins encrypting sensitive files.

5. Encryption Completion

The encryption process is completed, rendering the victim’s data inaccessible. The ransomware typically displays a ransom note explaining the situation and the demand.

6. Ransom Demand

The attacker provides instructions on how to pay the ransom, usually in cryptocurrency, which is harder (though not impossible) to trace than conventional payments.
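Security tools often try to catch the encryption stage while it is happening. One common heuristic is that encrypted output is statistically close to random bytes, so its Shannon entropy approaches the maximum of 8 bits per byte, while ordinary documents score much lower. The Python sketch below illustrates the idea; the 7.5 threshold and the function names are illustrative assumptions, not values taken from any particular product.

```python
import math
import os
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of `data` in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)  # iterating over bytes yields ints 0-255
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_encrypted(data: bytes, threshold: float = 7.5) -> bool:
    """Heuristic flag: content whose entropy is near the 8-bit maximum."""
    return shannon_entropy(data) >= threshold


plain = b"Dear John, your tax documents are attached. " * 100
ciphertext_like = os.urandom(4096)  # random bytes stand in for encrypted file contents

print(round(shannon_entropy(plain), 2), looks_encrypted(plain))
print(round(shannon_entropy(ciphertext_like), 2), looks_encrypted(ciphertext_like))
```

Compressed formats such as ZIP archives or JPEG photos also have high entropy, so this check produces false positives on its own; practical detection typically combines it with other signals, such as a single process rewriting many files in a short period.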

Identity Theft Ramifications

The ransomware attack on the Oakland man wasn’t just about the encrypted files; it was a devastating breach of his personal identity. The theft of his digital information opened the door to a cascade of potential problems, impacting his finances and creditworthiness for years to come. Understanding the ramifications of this type of identity theft is crucial for both victims and those seeking to prevent similar situations.

Types of Stolen Personal Information

The attackers likely gained access to a wide range of sensitive information. This could include the victim’s full name, address, date of birth, Social Security number, driver’s license number, passport information, credit card numbers, bank account details, medical records, and potentially even family members’ information. The more comprehensive the data breach, the greater the potential for long-term damage. The sheer volume of personal details accessible through a successful ransomware attack makes it a particularly dangerous crime.

Financial Consequences

The financial consequences of identity theft can be severe and far-reaching. The most immediate concerns are credit card fraud and bank account breaches. Attackers could use stolen credit card numbers to make unauthorized purchases, leaving the victim with significant debt and a damaged credit history. Similarly, access to bank account details could lead to direct theft of funds, leaving the victim financially vulnerable.

Beyond immediate losses, the cost of rectifying the damage, including credit monitoring services and legal fees, can quickly mount.

Long-Term Effects on Credit Score and Financial Stability

The long-term effects on the victim’s credit score and financial stability can be devastating. Even after fraudulent accounts are closed and debts are resolved, the negative marks on the victim’s credit report can persist for years, making it difficult to obtain loans, rent an apartment, or even secure certain jobs. Rebuilding credit after a significant identity theft incident requires considerable time, effort, and often professional assistance.

The financial instability caused by identity theft can have ripple effects, impacting the victim’s ability to manage other aspects of their life.

Examples of Identity Theft Misuse

Stolen identity information can be used in numerous ways by attackers. For example, they might open fraudulent credit accounts in the victim’s name, take out loans, file taxes fraudulently to receive refunds, or even use the stolen identity to commit more serious crimes, such as opening businesses under a false identity to launder money. The possibilities are unfortunately vast and can cause significant damage.

The attacker’s actions might not be immediately apparent, leading to a delay in discovery and making remediation more complex.

Types of Identity Theft and Associated Risks

| Type of Theft | Examples of Stolen Data | Potential Consequences | Mitigation Strategies |
|---|---|---|---|
| Credit Card Fraud | Credit card number, expiration date, CVV | Debt, damaged credit score, financial loss | Credit monitoring, secure online banking, report suspicious activity |
| Bank Account Fraud | Bank account number, routing number, online banking login credentials | Loss of funds, overdraft fees, damaged credit score | Strong passwords, two-factor authentication, regular bank statement review |
| Tax Identity Theft | Social Security number, address, date of birth | Fraudulent returns filed in your name, delayed refunds, IRS notices that must be resolved, difficulty filing taxes | File taxes early, use an IRS Identity Protection PIN, monitor tax records |
| Medical Identity Theft | Medical records, insurance information | Incorrect medical billing, denied insurance claims, medical debt | Review medical bills carefully, monitor health insurance statements, report suspicious activity |

Law Enforcement and Legal Aspects

Being a victim of a ransomware attack that leads to identity theft is a devastating experience, but understanding your legal options and reporting procedures is crucial for recovery and for preventing further harm. This section outlines the legal recourse available, the steps to report the crime, and the role of cybersecurity insurance.

Legal Recourse for Victims

Victims of ransomware attacks and subsequent identity theft have several legal avenues to pursue. Civil lawsuits against the perpetrators (if identified) are possible to recover financial losses and damages resulting from the attack. This might include compensation for stolen funds, credit repair costs, legal fees, and emotional distress. Additionally, victims can file complaints with the Federal Trade Commission (FTC) and state attorney general’s offices to initiate investigations and potentially contribute to broader enforcement efforts against cybercriminals.

Victims may also be able to recover some losses through their own insurance, if their policy covers such incidents. Finally, navigating the legal system can be complex, so consulting a qualified attorney who specializes in cybercrime and identity theft is highly recommended.

Reporting the Crime to Law Enforcement

Reporting the crime is a critical first step. Victims should promptly report the incident to their local police department and to the FBI’s Internet Crime Complaint Center (IC3), a centralized resource for reporting cybercrimes, including ransomware attacks and identity theft. Providing detailed information to law enforcement, such as the type of ransomware used, the extent of the data breach, and any financial losses incurred, is essential for an effective investigation.

Law enforcement agencies may not always be able to directly recover stolen funds or prosecute the perpetrators, but their involvement helps track the criminals, build evidence for future prosecutions, and potentially prevent similar attacks. Maintaining meticulous records of all communications, financial transactions, and steps taken to mitigate the damage is crucial throughout this process.

The Role of Cybersecurity Insurance

Cybersecurity insurance can significantly mitigate the financial impact of ransomware attacks and identity theft. Many policies cover expenses related to data recovery, legal fees, credit monitoring, and notification costs to affected individuals. However, the specific coverage varies greatly depending on the policy. It’s crucial to carefully review your policy’s terms and conditions before an incident occurs to understand what is and isn’t covered.

For instance, some policies have deductibles or exclusions that limit the amount of reimbursement. Choosing a reputable insurer with a comprehensive cyber insurance policy is a proactive measure that can protect individuals and businesses from significant financial losses during such attacks. The policy should clearly outline the process for filing a claim and the expected timeline for reimbursement.

A Step-by-Step Guide for Victims

Dealing with law enforcement and legal professionals after a ransomware attack and identity theft can be overwhelming. A structured approach is beneficial.

- Gather evidence: Collect all relevant information, including emails, transaction records, police reports, and any communication with the perpetrators.

- Report to law enforcement: File reports with your local police department and the IC3, providing detailed information about the incident.

- Contact your financial institutions: Report the theft to your banks, credit card companies, and other financial institutions to freeze accounts and prevent further losses.

- Initiate credit monitoring: Enroll in credit monitoring services to detect and address any fraudulent activities.

- Consult with legal counsel: Seek advice from an attorney specializing in cybercrime and identity theft to understand your legal options and pursue legal recourse.

- File insurance claims: If you have cybersecurity insurance, file a claim with your insurer, providing all necessary documentation.
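For the “Gather evidence” step and the record-keeping advice earlier in this section, one simple habit is to note a cryptographic fingerprint (a SHA-256 hash) of each evidence file, such as a saved ransom note, when you collect it, so you can later show the file has not changed. The Python sketch below appends one record per file to a JSON-lines log; the function names and log format are hypothetical choices, not a format required by police, the IC3 or insurers.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of_file(path: Path) -> str:
    """Hash a file in 64 KiB chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def log_evidence(path: Path, note: str, log_file: Path) -> dict:
    """Append one record (name, size, hash, UTC timestamp, note) as a JSON line."""
    record = {
        "file": path.name,
        "bytes": path.stat().st_size,
        "sha256": sha256_of_file(path),
        "logged_at_utc": datetime.now(timezone.utc).isoformat(),
        "note": note,
    }
    with log_file.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")
    return record
```

For example, `log_evidence(Path("ransom_note.png"), "screenshot of ransom note", Path("evidence_log.jsonl"))` adds one line to the log for that file.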

Prevention and Mitigation Strategies

Protecting yourself from ransomware attacks and the subsequent identity theft they often trigger requires a proactive and multi-layered approach. This involves implementing strong security practices across all your digital devices and accounts. Failing to do so leaves you vulnerable to significant financial and personal harm.

The following best practices significantly reduce your risk of becoming a victim.

Best Practices for Ransomware Prevention

A robust defense against ransomware requires a combination of technical safeguards and responsible online behavior. These measures, when implemented consistently, form a strong barrier against attacks.

- Regularly back up your data: Store backups offline, ideally on an external hard drive kept in a separate location. This ensures you can restore your files even if your system is encrypted.

- Use strong, unique passwords: Avoid easily guessable passwords and use a password manager to generate and securely store complex passwords for each of your online accounts.

- Enable multi-factor authentication (MFA): MFA adds an extra layer of security by requiring a second form of verification, such as a code sent to your phone, beyond just your password.

- Keep your software updated: Regularly update your operating system, applications, and antivirus software to patch security vulnerabilities that attackers exploit.

- Be cautious of email attachments and links: Avoid opening suspicious emails or clicking on links from unknown senders. Phishing attempts are a common ransomware delivery method.

- Educate yourself about phishing scams: Learn to identify the common characteristics of phishing emails, such as poor grammar, urgent requests, and suspicious sender addresses.

- Use reputable software and websites: Download software only from trusted sources and avoid visiting untrusted websites.

- Install and maintain robust antivirus software: A good antivirus program can detect and block malicious software before it can infect your system.
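The first practice, regular offline backups, can be partly automated. The Python sketch below copies a folder into a new timestamped folder (for example, on an external drive) and then checks each copy against its original by SHA-256 hash. The paths and function names are hypothetical, and the script does nothing to take the drive offline: disconnecting it after the backup is what keeps ransomware from encrypting the backup too.

```python
import hashlib
import shutil
from datetime import datetime
from pathlib import Path


def file_sha256(path: Path) -> str:
    """Hash a file in 64 KiB chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source: Path, backup_root: Path) -> Path:
    """Copy `source` into a new timestamped folder under `backup_root`, then verify it."""
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    dest = backup_root / f"{source.name}-{stamp}"
    # copytree refuses to write into an existing folder, so earlier backups
    # are never overwritten (two runs in the same second will raise an error).
    shutil.copytree(source, dest)
    for original in source.rglob("*"):
        if original.is_file():
            copy = dest / original.relative_to(source)
            if file_sha256(original) != file_sha256(copy):
                raise RuntimeError(f"Backup copy does not match original: {original}")
    return dest
```

Keeping each run in its own dated folder means an older, clean backup survives even if a later run copies files that were already damaged.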

Comprehensive Cybersecurity Plan for Identity Theft Minimization

A comprehensive plan goes beyond simply preventing ransomware; it actively protects your personal information from various threats.

This plan should include:

- Password management: Use a strong, unique password for every online account and utilize a password manager to securely store them.

- Multi-factor authentication (MFA): Enable MFA wherever possible to add an extra layer of security to your accounts.

- Regular software updates: Keep your operating system, applications, and antivirus software updated with the latest security patches.

- Data backups: Regularly back up your important data to an offline location.

- Fraud monitoring services: Consider using a credit monitoring service to detect any suspicious activity on your accounts.

- Secure Wi-Fi practices: Only use trusted Wi-Fi networks and avoid using public Wi-Fi for sensitive transactions.

- Privacy settings: Review and adjust your privacy settings on social media and other online platforms.

- Regular security audits: Periodically review your security practices to identify and address any vulnerabilities.

Creating Strong and Unique Passwords

Strong passwords are crucial for online security. They should be long, complex, and unique to each account. Avoid using easily guessable information such as birthdays or pet names.

Consider these guidelines:

- Length: Aim for at least 12 characters.

- Complexity: Include a mix of uppercase and lowercase letters, numbers, and symbols.

- Uniqueness: Use a different password for every online account.

- Password manager: Use a reputable password manager to generate and securely store your passwords.
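The guidelines above are easy to express as code. The Python sketch below checks a password against them and generates one with Python’s standard `secrets` module, which is designed for security-sensitive randomness. The function names are hypothetical, and this illustrates the rules rather than replacing a reputable password manager.

```python
import secrets
import string


def guideline_problems(password: str, min_length: int = 12) -> list:
    """List which of the guidelines a password fails; an empty list means it passes."""
    problems = []
    if len(password) < min_length:
        problems.append(f"shorter than {min_length} characters")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letter")
    if not any(c.isupper() for c in password):
        problems.append("no uppercase letter")
    if not any(c.isdigit() for c in password):
        problems.append("no number")
    if not any(c in string.punctuation for c in password):
        problems.append("no symbol")
    return problems


def generate_password(length: int = 16) -> str:
    """Generate a random password using the cryptographically secure `secrets` module."""
    if length < 12:
        raise ValueError("length should be at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:  # retry until every character class is present
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        if not guideline_problems(candidate):
            return candidate
```

Rules like these are only a floor: “Password1234!” passes every check above yet is an obvious guess, which is one reason to let a password manager generate passwords instead.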

Multi-Factor Authentication Enhancement

Multi-factor authentication (MFA) adds a significant layer of security by requiring more than just a password to access your accounts. Even if your password is compromised, an attacker will still need access to your second factor (e.g., your phone) to gain entry.

Common MFA methods include:

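- One-time codes (OTP): Codes sent via SMS or generated by an authenticator app.

- Biometrics: Fingerprint or facial recognition.

- Security keys: Physical devices that either display one-time codes or, like FIDO2/U2F keys, prove your identity cryptographically when plugged in or tapped.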
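Authenticator apps that produce one-time codes usually implement the TOTP algorithm from RFC 6238: your phone and the service share a secret key, and each independently derives a short code from that key and the current 30-second time window, so the code never has to travel over SMS. The Python sketch below shows the mechanism using only the standard library; the function names are illustrative, and it is meant for understanding, not as a replacement for a vetted authenticator app.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password as defined in RFC 4226 (HMAC-SHA1)."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # "dynamic truncation": pick 4 bytes based on the last nibble
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the count of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def key_from_base32(secret: str) -> bytes:
    """Decode the Base32 secret that a setup QR code or setup key typically carries."""
    cleaned = secret.replace(" ", "").upper()
    cleaned += "=" * (-len(cleaned) % 8)  # restore padding that apps often omit
    return base64.b32decode(cleaned)
```

Using the test key from the RFC, `totp(b"12345678901234567890", at=59, digits=8)` returns `"94287082"`, matching the published test vector. Because the code depends on a secret stored on your device, a stolen password alone is not enough to produce it.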

Importance of Regular Software Updates and Patching

Software updates often include critical security patches that address vulnerabilities that attackers could exploit. Regularly updating your software is crucial to minimize your risk of infection.

This includes:

- Operating system: Regularly update your Windows, macOS, or Linux operating system.

- Applications: Keep all your applications, including web browsers, email clients, and office software, up-to-date.

- Antivirus software: Ensure your antivirus software is always running and updated with the latest virus definitions.

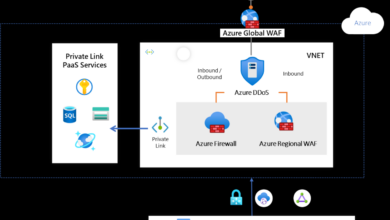

Visual Representation of a Robust Cybersecurity Defense System

Imagine a castle with multiple layers of defense. The outermost layer is a strong wall representing preventative measures like firewalls and antivirus software. The next layer is a moat representing strong passwords and multi-factor authentication. Inside, there are multiple guards (regular software updates and security awareness training) patrolling the castle walls. At the very center is the king (your valuable data), well-protected and backed up regularly to an offsite location (a separate castle).

The Role of Cybersecurity Awareness

The recent ransomware attack and subsequent identity theft in Oakland highlight a critical need: robust cybersecurity awareness. While sophisticated technology plays a crucial role in protection, human error remains a significant vulnerability exploited by cybercriminals. Investing in comprehensive cybersecurity awareness training for both individuals and organizations is no longer a luxury but a necessity in today’s digital landscape.

Without a proactive and educated approach, individuals and businesses remain highly susceptible to various cyber threats.

Cybersecurity awareness training empowers individuals and organizations to recognize and respond effectively to emerging threats. It fosters a culture of security, encouraging proactive behavior and minimizing the likelihood of successful attacks. This includes understanding the risks associated with various online activities and implementing preventative measures to safeguard personal and organizational data.

Common Phishing Techniques

Phishing attacks are a primary method used by attackers to gain unauthorized access. These attacks often involve deceptive emails, text messages, or websites designed to trick individuals into revealing sensitive information, such as usernames, passwords, credit card details, or Social Security numbers. Common techniques include impersonating legitimate organizations (e.g., banks, government agencies), creating a sense of urgency (e.g., “Your account has been compromised”), or using emotionally manipulative language.

For instance, a phishing email might appear to be from a well-known online retailer, notifying the recipient of a supposed shipping problem and requesting them to click a link to update their information. This link often leads to a fake website that harvests the victim’s credentials.
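One of the few reliable clues in a message like this is where the link actually points. The Python sketch below checks whether a link’s hostname is the domain the message claims to come from, or a subdomain of it; `example-shop.com` is a hypothetical retailer domain used only for illustration. A check like this catches common tricks such as placing the real brand name at the start of an attacker’s domain, but it is only one signal: it cannot flag a lookalike domain you did not think to compare against, or a legitimate site that has itself been compromised.

```python
from urllib.parse import urlsplit


def link_matches_domain(url: str, expected_domain: str) -> bool:
    """True if the URL's hostname is expected_domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").rstrip(".")  # .hostname is already lowercased
    expected = expected_domain.lower().rstrip(".")
    return host == expected or host.endswith("." + expected)


# Hypothetical links from a "shipping problem" email claiming to be from example-shop.com
for link in [
    "https://www.example-shop.com/orders/123",
    "https://example-shop.com.delivery-update.example/login",
    "https://example-shop.com@198.51.100.7/verify",
    "https://secure-example-shop.com/account",
]:
    print(link_matches_domain(link, "example-shop.com"), link)
```

Only the first link passes. The second merely starts with the brand name, the third hides a bare IP address after an `@`, and the fourth is a different domain that happens to contain the brand name.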

Social Engineering Tactics

Social engineering exploits human psychology to manipulate individuals into divulging confidential data or performing actions that compromise security. Attackers often build rapport and trust with their victims, using techniques like pretexting (creating a false scenario to gain information), baiting (offering something enticing to lure the victim), or quid pro quo (offering something in exchange for information). A common example is a phone call from someone claiming to be from the IT department, asking for the victim’s password to “troubleshoot a problem.” The attacker’s charm and perceived authority can lead the victim to unwittingly reveal sensitive information.

Types of Ransomware Attacks and Their Impact

Ransomware attacks vary in their methods and impact. Some target individual users, encrypting their personal files and demanding a ransom for their release. Others target organizations, encrypting critical data and disrupting operations. The impact can range from financial losses due to ransom payments and data recovery costs to reputational damage and legal liabilities. For example, CryptoLocker, an early and widespread strain, encrypted documents, photos, and other personal files on a victim’s computer and connected drives, leaving them inaccessible unless a ransom was paid.

A more sophisticated attack on an organization could cripple its entire IT infrastructure, leading to significant downtime and financial losses. The Oakland incident serves as a stark reminder of the devastating consequences. The type of ransomware used, the level of encryption, and the attacker’s sophistication all contribute to the overall impact.

Conclusive Thoughts

John’s experience serves as a stark reminder of the ever-present threat of ransomware and identity theft. While the emotional and financial toll can be immense, there are steps we can all take to bolster our online defenses. By understanding the methods used by attackers, implementing robust security measures, and staying vigilant, we can significantly reduce our vulnerability. It’s not about living in fear, but about being informed and proactive in protecting ourselves and our data in this digital age.

Remember, your digital security is your responsibility.

FAQ Overview

What types of ransomware are most common?

Several types exist, including encrypting ransomware (the most common, which encrypts your files), locker ransomware (which locks you out of your entire system), and wipers (malware that destroys your data, sometimes disguised as ransomware).

Can I recover my data after a ransomware attack?

Sometimes, yes. If you have backups, restoring from them is the best option. Professional data recovery services might help, but paying the ransom is generally not recommended.

How long does it take to recover from identity theft?

Recovery can take months or even years, involving credit report monitoring, fraud alerts, and contacting various institutions to resolve fraudulent activities.

Should I report a ransomware attack to the police?

Absolutely. Reporting the crime is crucial for investigations and helps law enforcement track down perpetrators. The FBI’s Internet Crime Complaint Center (IC3) is a good resource.