Why Take an Enterprise Software Portfolio Cloud Native?

Why take an enterprise software portfolio cloud native? Many businesses are grappling with that question today, and the answer isn’t a one-liner. It comes down to understanding a shift in how we build, deploy, and manage software, a shift that promises greater agility, scalability, and cost efficiency. For many enterprises, a cloud-native approach is moving from a luxury to a necessity as the pace of change in the market keeps increasing.

This post explores the key benefits, from accelerating time-to-market and boosting scalability to enhancing resilience and reducing operational costs. We’ll also tackle common concerns around security and compliance, giving a balanced view of the challenges and rewards of going cloud native.

Increased Agility and Faster Time to Market

Migrating to a cloud-native architecture offers significant advantages in terms of speed and efficiency for enterprise software development and deployment. The ability to rapidly iterate, adapt, and respond to market demands is paramount in today’s competitive landscape, and cloud-native principles are key to achieving this agility. This increased speed isn’t just about faster deployments; it’s about enabling a more responsive and innovative organization.

Cloud-native architectures, built upon microservices and supported by robust CI/CD pipelines, dramatically reduce the time it takes to get new features and updates into the hands of users. This allows businesses to respond more quickly to changing market conditions, customer feedback, and emerging opportunities. The decoupled nature of microservices and automated deployment processes significantly minimize the risk and complexity associated with traditional monolithic deployments.

Microservices and Quicker Releases

Microservices break down large applications into smaller, independently deployable units. This modularity means that changes to one part of the system don’t require a complete redeployment of the entire application. For example, imagine an e-commerce platform. With a microservices architecture, updating the payment gateway doesn’t necessitate downtime for the product catalog or user accounts. Teams can work independently on different microservices, leading to parallel development and faster release cycles.

This allows for more frequent, smaller releases, making it easier to test and roll back changes if necessary, reducing overall risk. The ability to deploy updates incrementally also allows for A/B testing and the gradual rollout of new features to minimize disruption and gather user feedback.
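
One common way to implement the gradual rollout described above is to assign each user to a stable percentage bucket and enable the feature only for buckets below the current rollout percentage. The following is a minimal sketch of that idea, not a specific product’s API; the function names and the `new-checkout` feature name are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket in the range 0..99 for one feature."""
    # Hashing feature and user together gives each feature its own,
    # independent split of the user base.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(user_id: str, feature: str, rollout_percent: int) -> bool:
    """Return True if the feature is switched on for this user."""
    return rollout_bucket(user_id, feature) < rollout_percent


if __name__ == "__main__":
    # Hypothetical feature name; raise the percentage as confidence grows.
    for user in ["user-1", "user-2", "user-3"]:
        print(user, is_enabled(user, "new-checkout", rollout_percent=10))
```

Because the bucket depends only on the user and the feature, a user who sees the feature at 10% keeps seeing it as the percentage is raised, which keeps the experience consistent during the rollout.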

Continuous Integration and Continuous Delivery (CI/CD)

CI/CD is the backbone of a fast-paced cloud-native development process. CI involves automatically building and testing code changes as they are committed, ensuring early detection of integration issues. CD automates the deployment process, moving code from development through testing and into production with minimal manual intervention. This automation drastically reduces the time spent on manual processes, allowing for much more frequent deployments.

The use of automated testing throughout the CI/CD pipeline also improves the quality of the software, minimizing the risk of bugs making it into production. Furthermore, a robust CI/CD pipeline enables teams to experiment with new features and technologies more readily, accelerating innovation.
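
The gating behaviour of a pipeline can be sketched in a few lines: stages run in order, and a failing stage stops everything after it, so untested code never reaches deployment. This is an illustrative model only; real CI/CD systems are configured in their own formats, and the stage names here are assumptions.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure.

    Returns (succeeded, names of the stages that completed successfully).
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            # A failed stage blocks every later stage, including deploy.
            return False, completed
        completed.append(name)
    return True, completed


if __name__ == "__main__":
    stages = [
        ("build", lambda: True),
        ("test", lambda: False),   # simulate a failing test suite
        ("deploy", lambda: True),  # never reached in this run
    ]
    print(run_pipeline(stages))
```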

Deployment Time Comparison: Traditional vs. Cloud-Native

The difference in deployment times between traditional and cloud-native approaches is substantial. The following table illustrates this contrast, showing hypothetical deployment times for a moderately sized application update:

| Deployment Method | Testing Time | Deployment Time | Total Time |
|---|---|---|---|
| Traditional Monolithic | 2-3 days | 1-2 days | 3-5 days |
| Cloud-Native Microservices with CI/CD | 1-2 hours | 15-30 minutes | 1.25-2.5 hours |

Enhanced Scalability and Elasticity

One of the most compelling reasons to embrace a cloud-native enterprise software portfolio is the inherent scalability and elasticity it offers. Unlike traditional monolithic applications, cloud-native architectures, built on microservices and containers, allow for incredibly flexible and efficient resource management, adapting seamlessly to fluctuating demands. This dynamic scalability translates directly into cost savings and improved user experience.

Cloud-native principles empower applications to scale effortlessly based on real-time needs. This is achieved through the use of techniques like auto-scaling, where the system automatically adjusts the number of instances running based on metrics such as CPU utilization, memory consumption, and request volume. This ensures optimal performance during peak loads while avoiding wasted resources during periods of low activity.
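
As a concrete illustration of a metric-driven scaling decision, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule and clamps the result to a minimum and maximum. It leaves out the tolerance band and stabilization behaviour of the real autoscaler, and the default bounds are assumptions chosen for the example.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Proportional scaling rule: grow or shrink in line with the metric ratio."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Never scale below the floor or above the ceiling.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 instances at 90% average CPU with a 60% target -> scale out.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    # 4 instances at 30% average CPU with a 60% target -> scale in.
    print(desired_replicas(4, current_metric=30, target_metric=60))
```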

Auto-Scaling and Resource Optimization

Auto-scaling dramatically reduces infrastructure costs by only provisioning the resources necessary at any given moment. Imagine a popular e-commerce site experiencing a surge in traffic during a major sale. With traditional infrastructure, the company would need to over-provision resources to handle the peak load, resulting in significant idle capacity and wasted expenditure during off-peak hours. A cloud-native approach, however, would automatically spin up additional instances to handle the increased traffic, scaling down just as quickly when the surge subsides.

This dynamic allocation ensures that resources are used efficiently, minimizing costs and maximizing return on investment. For example, a company like Netflix relies heavily on auto-scaling to handle the massive fluctuations in user demand for its streaming services.

Horizontal vs. Vertical Scaling

In a cloud-native context, horizontal scaling (adding more instances of the application) is usually preferred over vertical scaling (increasing the CPU, memory, or other resources of a single instance). Vertical scaling has a hard ceiling: a single machine can only get so large, and each step up tends to cost more. Horizontal scaling lets capacity grow well beyond that ceiling by adding more, smaller instances, provided the workload can be spread across them.

This also enhances fault tolerance; if one instance fails, the others continue to operate, ensuring high availability. Consider a large database application. Vertical scaling might involve upgrading to a more powerful server, a process that can be time-consuming and disruptive. Horizontal scaling, however, could involve adding more database nodes to a cluster, distributing the load and minimizing downtime.
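
The fault-tolerance benefit of horizontal scaling can be seen in a small round-robin balancer that simply skips instances marked as unhealthy. This is a toy sketch with hypothetical instance names, not how any particular load balancer is implemented.

```python
from typing import List


class RoundRobinBalancer:
    """Rotate requests across instances, skipping any marked as down."""

    def __init__(self, instances: List[str]) -> None:
        self.instances = list(instances)
        self.healthy = set(self.instances)
        self._next = 0

    def mark_down(self, instance: str) -> None:
        self.healthy.discard(instance)

    def mark_up(self, instance: str) -> None:
        if instance in self.instances:
            self.healthy.add(instance)

    def pick(self) -> str:
        # Try each instance at most once per call.
        for _ in range(len(self.instances)):
            candidate = self.instances[self._next % len(self.instances)]
            self._next += 1
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy instances available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    balancer.mark_down("app-2")  # simulate one failed instance
    print([balancer.pick() for _ in range(4)])
```

With one of three instances down, requests keep flowing to the remaining two; with a single vertically scaled instance there would be nothing left to route to.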

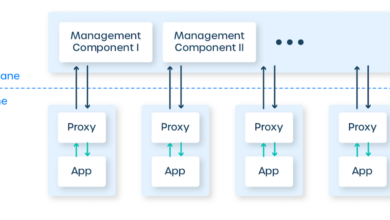

Illustrative Diagram of Cloud-Native Scaling

Imagine a diagram depicting a cloud-native application represented as a collection of independent microservices, each running in its own container. These containers are orchestrated by a container orchestration platform like Kubernetes. At the bottom of the diagram, we see a pool of virtual machines or serverless functions in a cloud provider’s infrastructure. As demand increases (represented visually by an upward-trending graph), the orchestration platform automatically deploys more containers, drawing resources from the pool.

These new containers seamlessly join the application, distributing the load and ensuring continued performance. Conversely, as demand decreases (a downward-trending graph), the platform automatically scales down, removing unnecessary containers and freeing up resources. The diagram visually emphasizes the dynamic and automated nature of the scaling process, highlighting the independent nature of the microservices and their ability to scale individually based on their specific needs.

This contrasts sharply with a monolithic application where scaling requires scaling the entire application as a single unit, a far less efficient and flexible approach.

Improved Resilience and Fault Tolerance

Moving your enterprise software portfolio to a cloud-native architecture offers significant improvements in resilience and fault tolerance, far exceeding what’s possible with traditional monolithic applications. This enhanced robustness stems from the inherent design principles of cloud-native systems, focusing on independent, microservices-based deployments. Let’s explore how this translates to practical benefits.

Cloud-native architectures achieve improved resilience through a combination of key architectural components and operational practices. The distributed nature of these systems, combined with automation and intelligent monitoring, allows for rapid recovery from failures, minimizing downtime and ensuring business continuity.

Key Components of a Resilient Cloud-Native Architecture

A resilient cloud-native architecture relies on several interconnected components working in harmony. These components, when properly implemented, provide multiple layers of protection against failures and ensure continued operation even in the face of unexpected events. Effective monitoring and automated responses are crucial for maintaining resilience.

- Microservices Architecture: Breaking down applications into small, independent services isolates failure points. If one service fails, others continue to operate.

- Containerization (e.g., Docker): Packaging applications and their dependencies into containers provides consistent execution environments across different infrastructure. This simplifies deployment and enhances portability.

- Orchestration (e.g., Kubernetes): Orchestration platforms automate the deployment, scaling, and management of containers. They ensure high availability by automatically restarting failed containers and distributing workloads across multiple nodes.

- Service Mesh (e.g., Istio, Linkerd): Service meshes provide advanced traffic management, security, and observability capabilities for microservices. They enable features like circuit breaking, retries, and fault injection for enhanced resilience.

- Declarative Infrastructure as Code (IaC): Managing infrastructure through code allows for consistent and repeatable deployments, reducing the risk of human error and improving reliability.

- Monitoring and Logging: Comprehensive monitoring and logging provide real-time visibility into application health and performance, enabling proactive identification and resolution of issues.

Containerization and Orchestration’s Contribution to Fault Tolerance

Containerization and orchestration are cornerstones of fault-tolerant cloud-native systems. Containers provide a lightweight and isolated execution environment for applications, preventing conflicts and ensuring consistent behavior. Orchestration platforms like Kubernetes go further by automating the management of these containers, ensuring high availability and automatic recovery from failures.

For example, if a container crashes, Kubernetes automatically detects the failure and restarts it; if a whole node fails, it reschedules the affected pods onto healthy nodes and reroutes traffic accordingly. This happens without any manual intervention, ensuring minimal disruption to the application. The self-healing capabilities of Kubernetes significantly reduce downtime and improve overall system resilience.
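
Self-healing of this kind is usually built on a reconciliation loop: compare the desired state with what is actually running and issue whatever actions close the gap. The sketch below models one pass of such a loop. It is a simplified illustration, not Kubernetes code; the status strings and action names are assumptions, and scaling down surplus containers is left out.

```python
from typing import Dict, List


def reconcile(desired_replicas: int, observed: Dict[str, str]) -> List[str]:
    """Return the actions needed to get back to the desired replica count.

    `observed` maps a container name to its status; anything other than
    "running" is treated as failed.
    """
    actions: List[str] = []
    running = 0
    for name in sorted(observed):
        if observed[name] == "running":
            running += 1
        else:
            # Failed containers are cleaned up rather than repaired in place.
            actions.append(f"remove {name}")
    # Start enough replacements to reach the desired count again.
    for _ in range(max(0, desired_replicas - running)):
        actions.append("start replacement")
    return actions


if __name__ == "__main__":
    state = {"web-1": "running", "web-2": "crashed", "web-3": "running"}
    print(reconcile(3, state))
```

Running this pass repeatedly is what makes recovery automatic: nobody has to notice the crash, because the next comparison against the desired state triggers the fix.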

Service Mesh Technologies and Application Reliability

Service meshes enhance application reliability by providing advanced traffic management and observability features for microservices. They act as an infrastructure layer that sits between microservices, enabling features such as:

- Circuit Breaking: Prevents cascading failures by stopping requests to failing services.

- Retries: Automatically retries failed requests to improve the success rate of operations.

- Fault Injection: Simulates failures to test the resilience of the system and identify weaknesses.

- Traffic Routing: Directs traffic to healthy instances of a service, avoiding unhealthy ones.

- Observability: Provides detailed insights into the communication patterns and health of microservices.

These capabilities enable developers to build more resilient applications and reduce the impact of failures. A service mesh essentially acts as a sophisticated “traffic cop” for microservices, ensuring smooth and reliable communication even under stress.
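
To make circuit breaking concrete, here is a minimal in-process sketch of the pattern. A service mesh applies the same idea in its proxies rather than in application code, so this is an illustration of the behaviour, not of any mesh’s configuration; the class name, thresholds, and injectable clock are assumptions for the example.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_timeout: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                # Open: fail fast instead of hitting the struggling service.
                raise CircuitOpenError("circuit open; request not sent")
            # Timeout elapsed: half-open, so one trial request is let through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                # Open the circuit, or re-open it after a failed trial request.
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Wrapping outbound calls as `breaker.call(fetch_inventory, item_id)` (with `fetch_inventory` standing in for any remote call) means that once the threshold is hit, callers get an immediate error instead of piling more load onto a failing dependency, which is how cascading failures are contained.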

Failure Recovery Mechanisms: Traditional vs. Cloud-Native

Let’s compare the failure recovery mechanisms employed in traditional and cloud-native systems:

- Traditional Systems: Typically rely on manual intervention and often involve lengthy downtime for recovery. Recovery processes are usually complex and time-consuming, potentially impacting business operations significantly.

- Cloud-Native Systems: Employ automated self-healing mechanisms, minimizing downtime and ensuring rapid recovery. Failures are detected and addressed automatically, with minimal human intervention required. This leads to faster recovery times and improved business continuity.

For instance, a traditional application failure might require a manual restart, database recovery, and application reconfiguration, potentially taking hours. In contrast, a cloud-native application, using Kubernetes and a service mesh, might automatically restart failed containers within minutes, with minimal impact on users.

Cost Optimization and Reduced Operational Overhead

Migrating your enterprise software portfolio to a cloud-native architecture isn’t just about improved agility and resilience; it’s also a powerful lever for significant cost savings. By optimizing resource utilization and automating operational tasks, cloud-native approaches can dramatically reduce your total cost of ownership (TCO) compared to traditional on-premise solutions. This translates to freeing up budget for innovation and strategic initiatives.

Cloud-native architectures fundamentally change how we think about infrastructure costs. Instead of investing heavily in upfront capital expenditures (CapEx) for hardware, software licenses, and data centers, cloud-native solutions leverage operational expenditures (OpEx) through pay-as-you-go models. This shift allows businesses to scale resources up or down based on actual demand, avoiding the waste associated with over-provisioning or underutilized capacity.

Infrastructure Cost Reduction with Cloud-Native Approaches

The pay-as-you-go model inherent in cloud computing is a game-changer. You only pay for the compute, storage, and network resources you actually consume. This contrasts sharply with traditional on-premise setups where you need to purchase and maintain significant hardware capacity to handle peak loads, even if that capacity sits idle for much of the time. For example, a company experiencing seasonal demand fluctuations can significantly reduce infrastructure costs by scaling resources up during peak periods and down during slower times.

This dynamic scalability minimizes wasted resources and directly impacts the bottom line. Furthermore, the elimination of on-premise hardware maintenance, including cooling, power, and physical security, results in substantial cost savings.

Pay-as-You-Go Models and Total Cost of Ownership (TCO)

Let’s consider a hypothetical scenario: a company with a traditional on-premise application requiring 10 servers costing $10,000 each, plus ongoing maintenance costs of $2,000 per server annually. Their first-year infrastructure cost is $120,000 ($100,000 for hardware plus $20,000 for maintenance). By migrating to a cloud-native architecture, they might only need 5 virtual machines (VMs) during peak demand, costing $500 per month each, totaling $2,500 per month or $30,000 annually.

Even accounting for additional cloud services, the first-year savings are substantial, although the gap narrows in later years once the hardware has been paid for, so the comparison is best run over the hardware’s expected lifetime. This demonstrates how the pay-as-you-go model can reduce the TCO. Another example would be a retail company with a spike in online orders during the holiday season; they can easily scale their cloud infrastructure to handle the increased traffic without needing to invest in extra hardware that sits idle for the rest of the year.
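
The arithmetic behind this scenario is easy to check directly from the stated inputs (10 servers at $10,000 each with $2,000 per server per year in maintenance, versus 5 VMs at $500 per month). The sketch below does that for any number of years. It is deliberately simplified: it assumes no hardware refresh, constant VM usage, and no extra cloud services, power, or staffing costs.

```python
def on_prem_cost(servers: int, price_per_server: int,
                 maintenance_per_server_year: int, years: int) -> int:
    """One-off hardware purchase plus yearly maintenance."""
    hardware = servers * price_per_server
    maintenance = servers * maintenance_per_server_year * years
    return hardware + maintenance


def cloud_cost(vms: int, price_per_vm_month: int, years: int) -> int:
    """Pay-as-you-go cost, assuming all VMs run all year."""
    return vms * price_per_vm_month * 12 * years


if __name__ == "__main__":
    for years in (1, 3):
        print(
            f"{years} year(s): on-premise ${on_prem_cost(10, 10_000, 2_000, years):,}"
            f" vs cloud ${cloud_cost(5, 500, years):,}"
        )
```

In the first year this gives $120,000 against $30,000; over three years it gives $160,000 against $90,000. Running the VMs only during peak periods, as pay-as-you-go allows, would lower the cloud figure further.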

Operational Overhead Reduction through Automation and DevOps

Cloud-native practices emphasize automation and DevOps principles. Automated deployments, continuous integration/continuous delivery (CI/CD) pipelines, and infrastructure-as-code (IaC) significantly reduce manual intervention, minimizing operational errors and freeing up IT staff to focus on higher-value tasks. Automated monitoring and logging systems provide real-time insights into application performance, enabling proactive issue resolution and preventing costly downtime. For example, automated scaling based on real-time metrics ensures optimal resource utilization and prevents performance bottlenecks.

This automated approach reduces the need for large, dedicated IT operations teams, leading to further cost savings.

Cost Comparison: Traditional vs. Cloud-Native Solutions

| Cost Category | Traditional On-Premise | Cloud-Native | Notes |
|---|---|---|---|
| Hardware | High upfront investment, ongoing maintenance | Low or no upfront investment, pay-as-you-go | Significant capital expenditure (CapEx) vs. operational expenditure (OpEx) |
| Software Licenses | High upfront cost, potential for license compliance issues | Subscription-based, often pay-per-use | Reduced licensing complexities and costs |
| Personnel | Large IT operations team required | Smaller team, focus on strategic initiatives | Automation reduces manual effort |
| Power & Cooling | Significant ongoing costs | Included in cloud provider costs | Eliminates on-premise infrastructure management |

Improved Innovation and Faster Development Cycles

Cloud-native platforms represent a significant leap forward for enterprise software development, dramatically accelerating innovation and shrinking development cycles. By decoupling applications into microservices and leveraging containerization and orchestration, organizations can achieve a level of agility previously unimaginable. This allows for faster iteration, quicker responses to market demands, and ultimately, a more competitive edge.

The inherent flexibility and scalability of cloud-native architectures empower developers to experiment more freely and iterate more rapidly. This is achieved through a combination of streamlined processes, automated workflows, and a culture that embraces continuous improvement. The result is a faster path from concept to deployment, leading to quicker time-to-market and increased business value.

Accelerated Development with Cloud-Native Services

Cloud-native services such as serverless functions, managed databases, and message queues significantly reduce the time and effort required for development. Developers can focus on building core business logic instead of managing infrastructure. For instance, serverless functions allow developers to deploy code without worrying about server provisioning and scaling, while managed databases provide readily available, scalable database solutions. This shift from infrastructure management to application development frees up valuable developer time and resources, leading to faster development cycles.

The Synergistic Role of DevOps and Agile Methodologies

DevOps practices and agile methodologies are intrinsically linked to the success of cloud-native environments. DevOps emphasizes collaboration and automation throughout the software development lifecycle, from development to deployment and operations. Agile methodologies, with their iterative approach and focus on delivering value quickly, align perfectly with the rapid iteration capabilities of cloud-native platforms. Together, they create a feedback loop that continuously improves the development process, leading to faster delivery and higher quality software.

For example, continuous integration and continuous delivery (CI/CD) pipelines, a cornerstone of DevOps, automate the build, test, and deployment process, ensuring rapid and reliable releases.

Fostering a Culture of Experimentation and Rapid Iteration

Cloud-native technologies foster a culture of experimentation and rapid iteration by lowering the barrier to entry for trying new things. The ease of deploying and scaling microservices allows developers to test new features and functionalities quickly and safely, without impacting the entire application. This reduces the risk associated with experimentation, encouraging a more innovative approach to software development. Moreover, the ability to quickly roll back changes minimizes the impact of failures, creating a safe space for experimentation.

For example, A/B testing becomes significantly easier and faster with microservices, allowing businesses to test different versions of a feature and measure their impact on user engagement almost instantly.

Enhanced Security and Compliance

Migrating your enterprise software portfolio to a cloud-native architecture offers significant advantages, but it also introduces new security considerations. The distributed nature of cloud-native applications, coupled with the reliance on third-party services, necessitates a robust and proactive security strategy. Failing to address these concerns can expose your organization to significant risks, including data breaches, compliance violations, and reputational damage. Let’s delve into the key aspects of securing your cloud-native environment.

Security considerations when migrating to a cloud-native architecture are multifaceted. Traditional perimeter-based security models are less effective in the dynamic and distributed landscape of cloud-native environments. Microservices communicate across multiple networks, potentially increasing the attack surface. Furthermore, the use of containers and orchestration platforms introduces new vulnerabilities that need to be carefully managed.

Maintaining visibility and control across this complex ecosystem is crucial. Effective security strategies must account for the unique challenges posed by each component, from the underlying infrastructure to the individual microservices. A shift in mindset from securing the perimeter to securing the application itself is essential.

Securing Cloud-Native Applications and Infrastructure

Implementing robust security measures is paramount throughout the cloud-native application lifecycle. This includes securing the infrastructure layer (e.g., Kubernetes clusters, cloud providers), container images, and the applications themselves. Employing a multi-layered security approach, combining preventative and detective controls, is highly recommended. This might involve implementing network segmentation, using robust access control mechanisms, and leveraging technologies like service meshes to enhance security and observability.

Regular security audits and penetration testing are vital for identifying and mitigating vulnerabilities. Automated security scanning of container images before deployment helps prevent the introduction of known vulnerabilities into the production environment. Furthermore, employing a DevSecOps approach, integrating security practices throughout the software development lifecycle, is crucial for building secure cloud-native applications from the ground up. For instance, integrating security testing into CI/CD pipelines helps catch vulnerabilities early, reducing the risk and cost of remediation.

Identity and Access Management (IAM) in Cloud-Native Security

IAM plays a central role in securing cloud-native environments. Fine-grained access control, based on the principle of least privilege, is crucial for limiting the potential impact of security breaches. Robust authentication mechanisms, such as multi-factor authentication (MFA), are essential for preventing unauthorized access. IAM solutions should integrate seamlessly with various cloud-native components, including Kubernetes, container registries, and service meshes.

Centralized identity management systems can simplify user and service account management, improving overall security posture. Regular reviews of user permissions and access rights are vital to ensure that only authorized personnel have access to sensitive resources. For example, using role-based access control (RBAC) within Kubernetes allows administrators to define granular permissions for different users and services, preventing unauthorized access to sensitive cluster components.
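
The least-privilege idea behind RBAC can be sketched as a default-deny lookup: a subject may perform an action only if one of its roles explicitly grants it. The code below is a conceptual model, not the Kubernetes RBAC API; the role names, subjects, and permissions are all hypothetical.

```python
from typing import Dict, Set, Tuple

Permission = Tuple[str, str]  # (verb, resource)

# Hypothetical roles: each grants an explicit set of permissions.
ROLES: Dict[str, Set[Permission]] = {
    "viewer": {("get", "pods"), ("list", "pods")},
    "deployer": {("get", "deployments"), ("update", "deployments")},
}

# Hypothetical bindings: which roles each user or service account holds.
ROLE_BINDINGS: Dict[str, Set[str]] = {
    "alice": {"viewer"},
    "ci-bot": {"deployer"},
}


def is_allowed(subject: str, verb: str, resource: str) -> bool:
    """Default deny: allow only what a bound role explicitly grants."""
    for role in ROLE_BINDINGS.get(subject, set()):
        if (verb, resource) in ROLES.get(role, set()):
            return True
    return False


if __name__ == "__main__":
    print(is_allowed("alice", "list", "pods"))     # granted by "viewer"
    print(is_allowed("alice", "delete", "pods"))   # not granted by any role
    print(is_allowed("mallory", "get", "pods"))    # unknown subject
```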

Security Measures Checklist for Cloud-Native Enterprise Software

A comprehensive security strategy for cloud-native enterprise software requires a multi-faceted approach. The following checklist highlights key security measures:

- Implement a robust CI/CD pipeline with integrated security scanning and testing.

- Utilize a secure container registry with image signing and vulnerability scanning.

- Employ a service mesh for enhanced security and observability.

- Implement strong network segmentation and access control policies.

- Leverage secrets management tools to protect sensitive information.

- Employ a centralized IAM solution with strong authentication and authorization mechanisms.

- Regularly conduct security audits and penetration testing.

- Implement monitoring and logging to detect and respond to security incidents.

- Ensure compliance with relevant industry regulations and standards (e.g., GDPR, HIPAA).

- Develop and maintain a comprehensive incident response plan.

Conclusion

Ultimately, the decision to embrace a cloud-native approach for your enterprise software portfolio is a strategic one. It requires careful consideration of your specific needs, resources, and risk tolerance. However, the potential benefits, from increased agility and scalability to reduced costs and improved resilience, are too significant to ignore. By understanding the key advantages and addressing potential challenges proactively, you can pave the way for a more efficient, resilient, and innovative software ecosystem.

So, are you ready to take the leap?

FAQs

What are the biggest risks associated with migrating to a cloud-native architecture?

The biggest risks include potential security vulnerabilities if not properly addressed, the complexity of managing a distributed system, and the need for significant upfront investment in training and infrastructure.

How long does it typically take to migrate an existing enterprise software portfolio to a cloud-native architecture?

Migration timelines vary greatly depending on the size and complexity of the portfolio. It can range from several months to several years.

What kind of expertise is needed to manage a cloud-native environment?

You’ll need expertise in areas like containerization and orchestration (Docker, Kubernetes), DevOps practices, cloud platforms (AWS, Azure, GCP), and security best practices.

What are some common misconceptions about cloud-native architectures?

A common misconception is that it’s a purely technical endeavor. Successful cloud-native adoption requires a cultural shift towards DevOps and agile methodologies, impacting people and processes as much as technology.